mirror of https://github.com/bugsink/bugsink.git (synced 2025-12-21 13:00:13 -06:00)

Merge branch 'main' into feature/add_dark_theme

```diff
@@ -1,5 +1,14 @@
 # Changes
 
+## 1.6.3 (27 June 2025)
+
+* fix `make_consistent` on mysql (Fix #132)
+* Tags in `event_data` can be lists; deal with that (Fix #130)
+
+## 1.6.2 (19 June 2025)
+
+* Too many quotes in local-vars display (Fix #119)
+
 ## 1.6.1 (11 June 2025)
 
 Remove hard-coded slack `webhook_url` from the "test this connector" loop.
```
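The 1.6.3 entry says tags in `event_data` can be lists. Sentry-style payloads may carry `tags` either as a mapping or as a list of `[key, value]` pairs; a minimal sketch of normalizing both shapes, assuming exactly those two shapes, could look like this (`normalize_tags` is a hypothetical helper, not Bugsink's actual fix):

```python
from typing import Any


def normalize_tags(tags: Any) -> dict[str, str]:
    """Hypothetical helper: flatten event tags into a {key: value} dict.

    Assumes tags arrive either as a mapping or as a list of [key, value] pairs.
    """
    if isinstance(tags, dict):
        pairs = list(tags.items())
    elif isinstance(tags, (list, tuple)):
        # keep only well-formed [key, value] entries; skip anything else
        pairs = [p for p in tags if isinstance(p, (list, tuple)) and len(p) == 2]
    else:
        return {}  # None or an unexpected type: treat as "no tags"
    return {str(key): str(value) for key, value in pairs}


print(normalize_tags({"server_name": "web-1"}))  # → {'server_name': 'web-1'}
print(normalize_tags([["os", "linux"], ["release", "1.6.3"]]))  # → {'os': 'linux', 'release': '1.6.3'}
```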

@@ -1,16 +1,12 @@

|

||||

# Bugsink: Self-hosted Error Tracking

|

||||

|

||||

[Bugsink](https://www.bugsink.com/) offers [Error Tracking](https://www.bugsink.com/error-tracking/) for your applications with full control

|

||||

through self-hosting.

|

||||

|

||||

* [Error Tracking](https://www.bugsink.com/error-tracking/)

|

||||

* [Built to self-host](https://www.bugsink.com/built-to-self-host/)

|

||||

* [Sentry-SDK compatible](https://www.bugsink.com/connect-any-application/)

|

||||

* [Scalable and reliable](https://www.bugsink.com/scalable-and-reliable/)

|

||||

|

||||

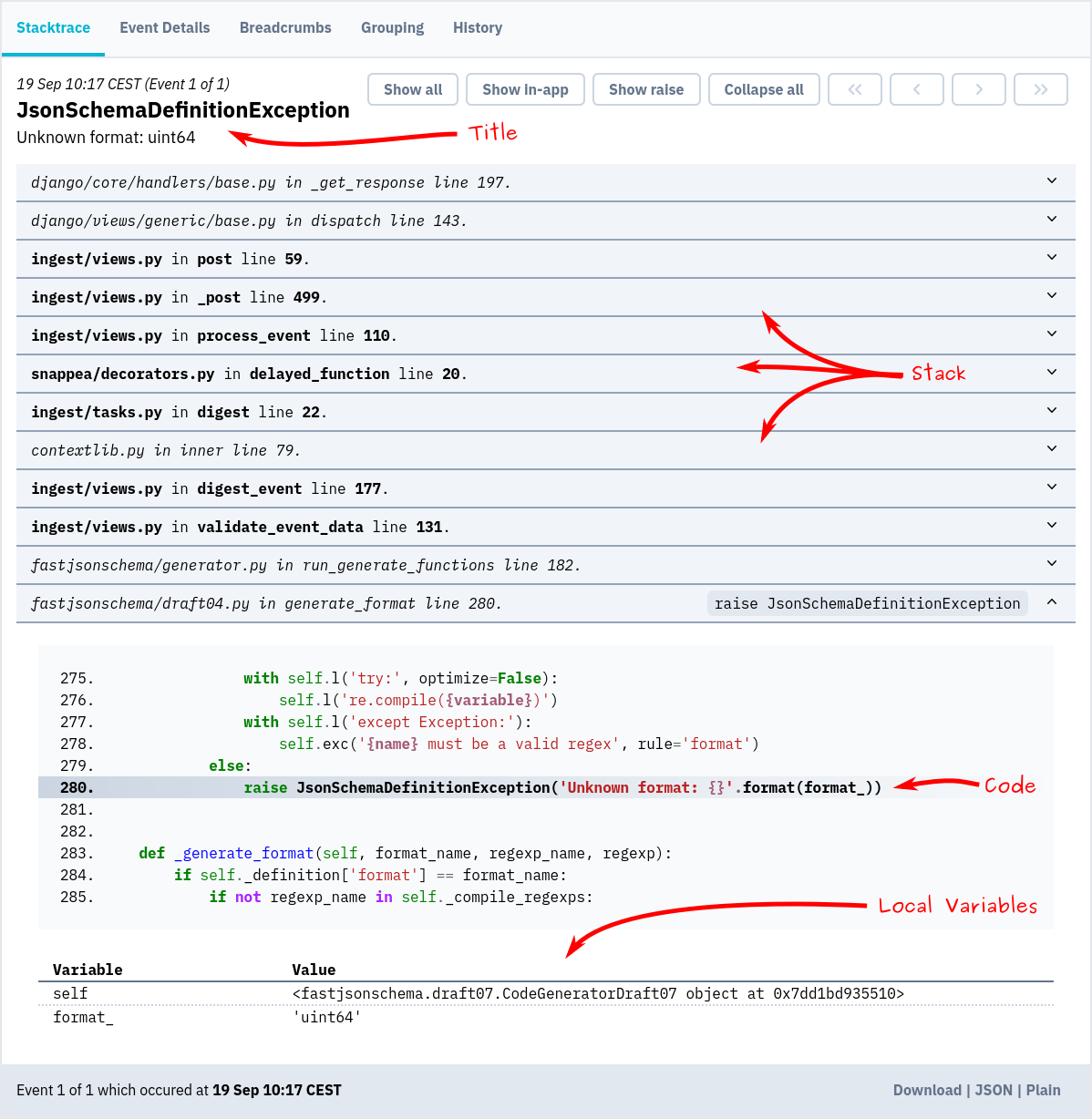

### Screenshot

|

||||

|

||||

This is what you'll get:

|

||||

|

||||

|

||||

|

||||

|

||||

```diff
@@ -22,7 +18,7 @@ The **quickest way to evaluate Bugsink** is to spin up a throw-away instance usi
 docker pull bugsink/bugsink:latest
 
 docker run \
-  -e SECRET_KEY={{ random_secret }} \
+  -e SECRET_KEY=PUT_AN_ACTUAL_RANDOM_SECRET_HERE_OF_AT_LEAST_50_CHARS \
   -e CREATE_SUPERUSER=admin:admin \
   -e PORT=8000 \
   -p 8000:8000 \
```
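The new README line asks for an actual random secret of at least 50 characters. One way to produce one is Python's standard `secrets` module; `make_secret_key` below is a hypothetical helper, and 50 random bytes is simply a choice that comfortably clears the 50-character minimum:

```python
import secrets


def make_secret_key(num_bytes: int = 50) -> str:
    # token_urlsafe encodes num_bytes random bytes as URL-safe base64 (about 1.3 characters per byte),
    # so 50 bytes yields a 67-character key made of letters, digits, "-" and "_".
    return secrets.token_urlsafe(num_bytes)


if __name__ == "__main__":
    print(make_secret_key())
```

On a host with `python3` available, something like `-e SECRET_KEY="$(python3 -c 'import secrets; print(secrets.token_urlsafe(50))')"` would inline the same call into the `docker run` command.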

```diff
@@ -0,0 +1,23 @@
+from django.db import migrations, models
+import django.db.models.deletion
+
+
+class Migration(migrations.Migration):
+
+    dependencies = [
+        # Django came up with 0014, whatever the reason, I'm sure that 0013 is at least required (as per comments there)
+        ("projects", "0014_alter_projectmembership_project"),
+        ("alerts", "0001_initial"),
+    ]
+
+    operations = [
+        migrations.AlterField(
+            model_name="messagingserviceconfig",
+            name="project",
+            field=models.ForeignKey(
+                on_delete=django.db.models.deletion.DO_NOTHING,
+                related_name="service_configs",
+                to="projects.project",
+            ),
+        ),
+    ]
```

```diff
@@ -5,7 +5,7 @@ from .service_backends.slack import SlackBackend
 
 
 class MessagingServiceConfig(models.Model):
-    project = models.ForeignKey(Project, on_delete=models.CASCADE, related_name="service_configs")
+    project = models.ForeignKey(Project, on_delete=models.DO_NOTHING, related_name="service_configs")
     display_name = models.CharField(max_length=100, blank=False,
                                     help_text='For display in the UI, e.g. "#general on company Slack"')
```

```diff
@@ -38,7 +38,7 @@ def check_event_storage_properly_configured(app_configs, **kwargs):
 @register("bsmain")
 def check_base_url_is_url(app_configs, **kwargs):
     try:
-        parts = urllib.parse.urlsplit(get_settings().BASE_URL)
+        parts = urllib.parse.urlsplit(str(get_settings().BASE_URL))
     except ValueError as e:
         return [Warning(
             str(e),
```
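`check_base_url_is_url` now passes `str(get_settings().BASE_URL)` to `urlsplit`, so whatever object the setting holds is coerced to text before parsing. A standalone sketch of the same pattern, without Django's checks framework, could look like the following; `base_url_problems` and its scheme/host rules are assumptions for illustration, since the rest of the real check is not part of this hunk:

```python
import urllib.parse


def base_url_problems(base_url: object) -> list[str]:
    """Hypothetical standalone check: list what is wrong with a BASE_URL value (empty list means OK)."""
    try:
        # Coerce to str first, as the diff now does; urlsplit expects str (or bytes)
        parts = urllib.parse.urlsplit(str(base_url))
    except ValueError as e:
        return [str(e)]

    problems = []
    # Illustrative rules only; the real check's further conditions are not shown in this diff
    if parts.scheme not in ("http", "https"):
        problems.append(f"scheme should be http or https, not {parts.scheme!r}")
    if not parts.netloc:
        problems.append("no host found")
    return problems


print(base_url_problems("https://bugsink.example.com"))  # → []
print(base_url_problems("http://[::1"))  # → ['Invalid IPv6 URL']
print(base_url_problems("bugsink.example.com"))
```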

```diff
@@ -99,33 +99,39 @@ def get_snappea_warnings():
 def useful_settings_processor(request):
     # name is misnomer, but "who cares".
 
-    installation = Installation.objects.get()
+    def get_system_warnings():
+        # implemented as an inner function to avoid calculating this when it's not actually needed. (i.e. anything
+        # except "the UI", e.g. ingest, API, admin, 404s). Actual 'cache' behavior is not needed, because this is called
+        # at most once per request (at the top of the template)
+        installation = Installation.objects.get()
 
-    system_warnings = []
+        system_warnings = []
 
-    # This list does not include e.g. the dummy.EmailBackend; intentional, because setting _that_ is always an
-    # indication of intentional "shut up I don't want to send emails" (and we don't want to warn about that). (as
-    # opposed to QuietConsoleEmailBackend, which is the default for the Docker "no EMAIL_HOST set" situation)
-    if settings.EMAIL_BACKEND in [
-            'bugsink.email_backends.QuietConsoleEmailBackend'] and not installation.silence_email_system_warning:
+        # This list does not include e.g. the dummy.EmailBackend; intentional, because setting _that_ is always an
+        # indication of intentional "shut up I don't want to send emails" (and we don't want to warn about that). (as
+        # opposed to QuietConsoleEmailBackend, which is the default for the Docker "no EMAIL_HOST set" situation)
+        if settings.EMAIL_BACKEND in [
+                'bugsink.email_backends.QuietConsoleEmailBackend'] and not installation.silence_email_system_warning:
 
-        if getattr(request, "user", AnonymousUser()).is_superuser:
-            ignore_url = reverse("silence_email_system_warning")
-        else:
-            # not a superuser, so can't silence the warning. I'm applying some heuristics here;
-            # * superusers (and only those) will be able to deal with this (have access to EMAIL_BACKEND)
-            # * better to still show (though not silencable) the message to non-superusers.
-            # this will not always be so, but it's a good start.
-            ignore_url = None
+            if getattr(request, "user", AnonymousUser()).is_superuser:
+                ignore_url = reverse("silence_email_system_warning")
+            else:
+                # not a superuser, so can't silence the warning. I'm applying some heuristics here;
+                # * superusers (and only those) will be able to deal with this (have access to EMAIL_BACKEND)
+                # * better to still show (though not silencable) the message to non-superusers.
+                # this will not always be so, but it's a good start.
+                ignore_url = None
 
-        system_warnings.append(SystemWarning(EMAIL_BACKEND_WARNING, ignore_url))
+            system_warnings.append(SystemWarning(EMAIL_BACKEND_WARNING, ignore_url))
 
+        return system_warnings + get_snappea_warnings()
+
     return {
         # Note: no way to actually set the license key yet, so nagging always happens for now.
         'site_title': get_settings().SITE_TITLE,
         'registration_enabled': get_settings().USER_REGISTRATION == CB_ANYBODY,
         'app_settings': get_settings(),
-        'system_warnings': system_warnings + get_snappea_warnings(),
+        'system_warnings': get_system_warnings,
     }
```
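The reworked `useful_settings_processor` puts the function `get_system_warnings` itself into the template context instead of its result. Django's template engine calls a callable when it resolves a variable, so the queries only run on pages whose template actually reads `system_warnings`. The sketch below imitates that in plain Python; `WarningSource`, `settings_processor` and `render` are hypothetical stand-ins for the DB lookups, the context processor and the template engine:

```python
class WarningSource:
    """Hypothetical stand-in for the database-backed warning checks."""

    def __init__(self) -> None:
        self.calls = 0

    def get_system_warnings(self) -> list[str]:
        self.calls += 1  # counts how often the "expensive" work actually runs
        return ["email backend is not configured"]


def settings_processor(source: WarningSource) -> dict:
    # Put the bound method itself in the context; nothing is computed yet.
    return {"site_title": "Bugsink", "system_warnings": source.get_system_warnings}


def render(context: dict, is_ui_page: bool) -> str:
    # Imitates a template engine that calls callables when it resolves a variable.
    if not is_ui_page:
        return "ok"  # e.g. ingest or API responses never read system_warnings
    warnings = context["system_warnings"]
    if callable(warnings):
        warnings = warnings()
    return f"{context['site_title']}: {len(warnings)} warning(s)"


source = WarningSource()
context = settings_processor(source)
render(context, is_ui_page=False)
print(source.calls)  # → 0
render(context, is_ui_page=True)
print(source.calls)  # → 1
```

A template that read the variable twice would call the function twice; the diff's comment relies on it being read once, at the top of the template.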

```diff
@@ -39,7 +39,7 @@ def issue_membership_required(function):
         if "issue_pk" not in kwargs:
             raise TypeError("issue_pk must be passed as a keyword argument")
         issue_pk = kwargs.pop("issue_pk")
-        issue = get_object_or_404(Issue, pk=issue_pk)
+        issue = get_object_or_404(Issue, pk=issue_pk, is_deleted=False)
         kwargs["issue"] = issue
         if request.user.is_superuser:
             return function(request, *args, **kwargs)
```
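With `is_deleted=False` added to the lookup, an issue that has been marked as deleted (presumably while its related rows still await deferred cleanup) now yields a 404 exactly like a missing one. A framework-free sketch of that decorator shape, with `NotFound` standing in for Django's `Http404` and a hypothetical in-memory `ISSUES` table:

```python
import functools


class NotFound(Exception):
    """Stands in for Django's Http404 in this sketch."""


# Hypothetical in-memory "issues table": pk -> row
ISSUES = {
    1: {"pk": 1, "is_deleted": False},
    2: {"pk": 2, "is_deleted": True},  # marked deleted; dependents may still be pending cleanup
}


def get_live_or_404(table: dict, pk: int) -> dict:
    row = table.get(pk)
    # A soft-deleted row is treated exactly like a missing one
    if row is None or row["is_deleted"]:
        raise NotFound(pk)
    return row


def issue_required(function):
    @functools.wraps(function)
    def wrapper(*args, **kwargs):
        if "issue_pk" not in kwargs:
            raise TypeError("issue_pk must be passed as a keyword argument")
        kwargs["issue"] = get_live_or_404(ISSUES, kwargs.pop("issue_pk"))
        return function(*args, **kwargs)
    return wrapper


@issue_required
def issue_view(issue):
    return f"issue {issue['pk']}"


print(issue_view(issue_pk=1))  # → issue 1
```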

```diff
@@ -91,15 +91,13 @@ SNAPPEA = {
     "NUM_WORKERS": 1,
 }
 
-POSTMARK_API_KEY = os.getenv('POSTMARK_API_KEY')
-
-EMAIL_HOST = 'smtp.postmarkapp.com'
-EMAIL_HOST_USER = POSTMARK_API_KEY
-EMAIL_HOST_PASSWORD = POSTMARK_API_KEY
+EMAIL_HOST = os.getenv("EMAIL_HOST")
+EMAIL_HOST_USER = os.getenv("EMAIL_HOST_USER")
+EMAIL_HOST_PASSWORD = os.getenv("EMAIL_HOST_PASSWORD")
 EMAIL_PORT = 587
 EMAIL_USE_TLS = True
 
-SERVER_EMAIL = DEFAULT_FROM_EMAIL = 'Klaas van Schelven <klaas@vanschelven.com>'
+SERVER_EMAIL = DEFAULT_FROM_EMAIL = 'Klaas van Schelven <klaas@bugsink.com>'
 
 
 BUGSINK = {
```

```diff
@@ -8,6 +8,8 @@ import threading
 from django.db import transaction as django_db_transaction
 from django.db import DEFAULT_DB_ALIAS
+
+from snappea.settings import get_settings as get_snappea_settings
 
 performance_logger = logging.getLogger("bugsink.performance.db")
 local_storage = threading.local()
```

```diff
@@ -153,7 +155,7 @@ class ImmediateAtomic(SuperDurableAtomic):
         connection = django_db_transaction.get_connection(self.using)
 
         if hasattr(connection, "_start_transaction_under_autocommit"):
-            connection._start_transaction_under_autocommit_original = connection._start_transaction_under_autocommit
+            self._start_transaction_under_autocommit_original = connection._start_transaction_under_autocommit
             connection._start_transaction_under_autocommit = types.MethodType(
                 _start_transaction_under_autocommit_patched, connection)
```

```diff
@@ -183,9 +185,9 @@ class ImmediateAtomic(SuperDurableAtomic):
         performance_logger.info(f"{took * 1000:6.2f}ms IMMEDIATE transaction{using_clause}")
 
         connection = django_db_transaction.get_connection(self.using)
-        if hasattr(connection, "_start_transaction_under_autocommit"):
-            connection._start_transaction_under_autocommit = connection._start_transaction_under_autocommit_original
-            del connection._start_transaction_under_autocommit_original
+        if hasattr(self, "_start_transaction_under_autocommit_original"):
+            connection._start_transaction_under_autocommit = self._start_transaction_under_autocommit_original
+            del self._start_transaction_under_autocommit_original
 
 
 @contextmanager
```
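Across these two hunks, `ImmediateAtomic` now keeps the original `_start_transaction_under_autocommit` on the context manager (`self`) rather than on the shared connection object, and restores it from there. The sketch below shows the same patch-and-restore pattern on a toy class; `Connection`, `start_transaction` and `ImmediateSketch` are hypothetical. One property of storing the saved method on `self` is that nested use on the same connection unwinds correctly, which the usage lines demonstrate; the commit itself does not state its motivation.

```python
import types


class Connection:
    """Hypothetical stand-in for a database connection."""

    def start_transaction(self) -> str:
        return "BEGIN"


def _start_transaction_immediate(self) -> str:
    # Replacement behaviour, bound to one connection instance via types.MethodType
    return "BEGIN IMMEDIATE"


class ImmediateSketch:
    """Temporarily patches one connection's start_transaction; the saved original lives on self."""

    def __init__(self, connection: Connection) -> None:
        self.connection = connection

    def __enter__(self) -> "ImmediateSketch":
        # Save whatever is currently in place (possibly an outer patch) on the context manager
        self._original = self.connection.start_transaction
        self.connection.start_transaction = types.MethodType(_start_transaction_immediate, self.connection)
        return self

    def __exit__(self, exc_type, exc, tb) -> bool:
        self.connection.start_transaction = self._original
        del self._original
        return False  # never swallow exceptions


conn = Connection()
with ImmediateSketch(conn):
    with ImmediateSketch(conn):  # nested use on the same connection
        print(conn.start_transaction())  # → BEGIN IMMEDIATE
    print(conn.start_transaction())  # → BEGIN IMMEDIATE
print(conn.start_transaction())  # → BEGIN
```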

```diff
@@ -206,10 +208,21 @@ def immediate_atomic(using=None, savepoint=True, durable=True):
     else:
         immediate_atomic = ImmediateAtomic(using, savepoint, durable)
 
-    # https://stackoverflow.com/a/45681273/339144 provides some context on nesting context managers; and how to proceed
-    # if you want to do this with an arbitrary number of context managers.
-    with SemaphoreContext(using), immediate_atomic:
-        yield
+    if get_snappea_settings().TASK_ALWAYS_EAGER:
+        # In ALWAYS_EAGER mode we cannot use SemaphoreContext as the outermost context, because any delay_on_commit
+        # tasks that are triggered on __exit__ of the (in that case, inner) immediate_atomic, when themselves initiating
+        # a new task-with-transaction, will not be able to acquire the semaphore (it's not been released yet).
+        # Fundamentally the solution would be to push the "on commit" logic onto the outermost context, but that seems
+        # fragile (monkeypatching/heavy overriding) and since the whole SemaphoreContext is only needed as an extra
+        # guard against WAL growth (not something we care about in the non-production setup), we just simplify for that
+        # case.
+        with immediate_atomic:
+            yield
+    else:
+        # https://stackoverflow.com/a/45681273/339144 provides some context on nesting context managers; and how to
+        # proceed if you want to do this with an arbitrary number of context managers.
+        with SemaphoreContext(using), immediate_atomic:
+            yield
 
 
 def delay_on_commit(function, *args, **kwargs):
```
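The comment points to a Stack Overflow answer on nesting an arbitrary number of context managers; in the standard library, `contextlib.ExitStack` is the general tool for choosing at runtime which ones to enter. The sketch below uses it to include the semaphore only outside ALWAYS_EAGER mode, and reproduces the failure the new comment describes: an on-commit callback that runs inline and opens a new guarded transaction cannot acquire a semaphore the outer context still holds. `semaphore_context`, `fake_transaction` and `guarded_transaction` are hypothetical stand-ins for `SemaphoreContext` and `immediate_atomic`.

```python
import threading
from contextlib import ExitStack, contextmanager


class SemaphoreBusy(Exception):
    pass


@contextmanager
def semaphore_context(sem: threading.Semaphore):
    # Hypothetical stand-in for SemaphoreContext; fails fast instead of blocking
    if not sem.acquire(blocking=False):
        raise SemaphoreBusy("semaphore is still held by an outer context")
    try:
        yield
    finally:
        sem.release()


@contextmanager
def fake_transaction(on_commit: list):
    # Hypothetical stand-in for immediate_atomic: runs on-commit callbacks when the block exits cleanly
    yield
    for callback in on_commit:
        callback()


@contextmanager
def guarded_transaction(sem: threading.Semaphore, on_commit: list, always_eager: bool):
    # ExitStack decides at runtime which context managers to enter; they exit in reverse order,
    # so the semaphore (if entered) is released only after the on-commit callbacks have run
    with ExitStack() as stack:
        if not always_eager:
            stack.enter_context(semaphore_context(sem))
        stack.enter_context(fake_transaction(on_commit))
        yield


sem = threading.Semaphore(1)


def nested_task(always_eager: bool) -> None:
    with guarded_transaction(sem, [], always_eager):
        print("nested task ran")


# ALWAYS_EAGER-style: no semaphore, so the inline callback can open its own transaction
with guarded_transaction(sem, [lambda: nested_task(True)], always_eager=True):
    pass  # on exit, the callback prints "nested task ran"

# Semaphore outermost while callbacks still run inline: the nested acquire fails
try:
    with guarded_transaction(sem, [lambda: nested_task(False)], always_eager=False):
        pass
except SemaphoreBusy as e:
    print("failed:", e)
```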

bugsink/utils.py

```diff
@@ -1,7 +1,10 @@
+from collections import defaultdict
 from urllib.parse import urlparse
 
 from django.core.mail import EmailMultiAlternatives
 from django.template.loader import get_template
+from django.apps import apps
+from django.db.models import ForeignKey, F
 
 from .version import version
```

```diff
@@ -161,3 +164,162 @@ def eat_your_own_dogfood(sentry_dsn, **kwargs):
     sentry_sdk.init(
         **default_kwargs,
     )
+
+
+def get_model_topography():
+    """
+    Returns a dependency graph mapping:
+        referenced_model_key -> [
+            (referrer_model_class, fk_name),
+            ...
+        ]
+    """
+    dep_graph = defaultdict(list)
+    for model in apps.get_models():
+        for field in model._meta.get_fields(include_hidden=True):
+            if isinstance(field, ForeignKey):
+                referenced_model = field.related_model
+                referenced_key = f"{referenced_model._meta.app_label}.{referenced_model.__name__}"
+                dep_graph[referenced_key].append((model, field.name))
+    return dep_graph
+
+
+def fields_for_prune_orphans(model):
+    if model.__name__ == "IssueTag":
+        return ("value_id",)
+    return ()
```
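`get_model_topography` inverts every `ForeignKey` in the app registry into a "who points at me" map keyed by `app_label.ModelName`. The same inversion on a hand-written list of foreign keys, with no Django involved, is sketched below; `ForeignKeyInfo` and the FK list are illustrative assumptions, not Bugsink's actual schema:

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ForeignKeyInfo:
    """Hypothetical description of one FK: `referrer.field_name` points at `referenced`."""
    referrer: str
    field_name: str
    referenced: str


def model_topography(fks: list[ForeignKeyInfo]) -> dict[str, list[tuple[str, str]]]:
    # Invert the FKs: for each referenced model, list (referring model, fk field name) pairs
    dep_graph: dict[str, list[tuple[str, str]]] = defaultdict(list)
    for fk in fks:
        dep_graph[fk.referenced].append((fk.referrer, fk.field_name))
    return dep_graph


FKS = [
    ForeignKeyInfo("events.Event", "project", "projects.Project"),
    ForeignKeyInfo("events.Event", "issue", "issues.Issue"),
    ForeignKeyInfo("issues.Issue", "project", "projects.Project"),
    ForeignKeyInfo("tags.EventTag", "event", "events.Event"),
]
graph = model_topography(FKS)
print(graph["projects.Project"])  # → [('events.Event', 'project'), ('issues.Issue', 'project')]
```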

```diff
+
+
+def prune_orphans(model, d_ids_to_check):
+    """For some model, does dangling-model-cleanup.
+
+    In a sense the opposite of delete_deps; delete_deps takes care of deleting the recursive closure of things that point
+    to some root. The present function cleans up things that are being pointed to (and, after some other thing is
+    deleted, potentially are no longer being pointed to, hence 'orphaned').
+
+    This is the hardcoded edition (IssueTag only); we _could_ try to think about doing this generically based on the
+    dependency graph, but it's quite questionable whether a combination of generic & performant is easy to arrive at and
+    worth it.
+
+    pruning of TagValue is done "inline" (as opposed to using a GC-like vacuum "later") because, whatever the exact
+    performance trade-offs may be, the following holds true:
+
+    1. the inline version is easier to reason about, it "just happens ASAP", and in the context of a given issue;
+       vacuum-based has to take into consideration the full DB including non-orphaned values.
+    2. repeated work is somewhat minimized b/c of the IssueTag/EventTag relationship as described below.
+    """
+    from tags.models import TagValue, IssueTag  # avoid circular import
+
+    if model.__name__ != "IssueTag":
+        return  # we only prune IssueTag orphans
+
+    ids_to_check = [d["value_id"] for d in d_ids_to_check]
+
+    # used_in_event check is not needed, because non-existence of IssueTag always implies non-existence of EventTag,
+    # since [1] EventTag creation implies IssueTag creation and [2] in the cleanup code EventTag is deleted first.
+    used_in_issue = set(
+        IssueTag.objects.filter(value_id__in=ids_to_check).values_list('value_id', flat=True)
+    )
+    unused = [pk for pk in ids_to_check if pk not in used_in_issue]
+
+    if unused:
+        TagValue.objects.filter(id__in=unused).delete()
+
+    # The principled approach would be to clean up TagKeys as well at this point, but in practice there will be orders
+    # of magnitude fewer TagKey objects, and they are much less likely to become dangling, so the GC-like algo of "just
+    # vacuuming once in a while" is a much better fit for that.
```
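`prune_orphans` only re-checks the `TagValue` ids that the just-deleted rows pointed at, rather than scanning the whole table the way a periodic vacuum would. An in-memory sketch of that candidate-only check, with hypothetical names and plain sets in place of querysets:

```python
def prune_orphan_values(candidates: list[int], still_referenced: list[int], tag_values: set[int]) -> set[int]:
    """Hypothetical in-memory version of inline orphan pruning.

    candidates:        value ids the just-deleted rows pointed at (they *may* now be orphans)
    still_referenced:  value ids referenced by the remaining IssueTag-like rows
    tag_values:        the "TagValue table"; pruned ids are removed from it in place
    """
    # Only the candidates are checked, never the whole table (unlike a periodic vacuum)
    used = set(still_referenced).intersection(candidates)
    unused = {pk for pk in candidates if pk not in used}
    tag_values -= unused
    return unused


tag_values = {1, 2, 3, 4, 5}
pruned = prune_orphan_values(candidates=[1, 2, 3], still_referenced=[2, 4], tag_values=tag_values)
print(sorted(pruned), sorted(tag_values))  # → [1, 3] [2, 4, 5]
```

Value `5` is unreferenced but was never a candidate, so it survives; finding such leftovers is what a GC-style vacuum pass would be for.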

```diff
+
+
+def do_pre_delete(project_id, model, pks_to_delete, is_for_project):
+    "More model-specific cleanup, if needed; only for Event model at the moment."
+
+    if model.__name__ != "Event":
+        return  # we only do more cleanup for Event
+
+    from projects.models import Project
+    from events.models import Event
+    from events.retention import cleanup_events_on_storage
+
+    cleanup_events_on_storage(
+        Event.objects.filter(pk__in=pks_to_delete).exclude(storage_backend=None)
+        .values_list("id", "storage_backend")
+    )
+
+    if is_for_project:
+        # no need to update the stored_event_count for the project, because the project is being deleted
+        return
+
+    # Update project stored_event_count to reflect the deletion of the events. note: alternatively, we could do this
+    # on issue-delete (issue.stored_event_count is known too); potato, potato though.
+    # note: don't bother to do the same thing for Issue.stored_event_count, since we're in the process of deleting Issue
+    Project.objects.filter(id=project_id).update(stored_event_count=F('stored_event_count') - len(pks_to_delete))
+
+
+def delete_deps_with_budget(project_id, referring_model, fk_name, referred_ids, budget, dep_graph, is_for_project):
+    r"""
+    Deletes all objects of type referring_model that refer to any of the referred_ids via fk_name.
+    Returns the number of deleted objects.
+    And does this recursively (i.e. if there are further dependencies, it will delete those as well).
+
+    Caller                 This Func
+      |                       |
+      V                       V
+    <unspecified>          referring_model
+      ^                   /
+       \-------fk_name----
+
+    referred_ids           relevant_ids (deduced using a query)
+    """
+    num_deleted = 0
+
+    # Fetch ids of referring objects and their referred ids. Note that an index of fk_name can be assumed to exist,
+    # because fk_name is a ForeignKey field, and Django automatically creates an index for ForeignKey fields unless
+    # instructed otherwise: https://github.com/django/django/blob/7feafd79a481/django/db/models/fields/related.py#L1025
+    relevant_ids = list(
+        referring_model.objects.filter(**{f"{fk_name}__in": referred_ids}).order_by(f"{fk_name}_id", 'pk').values(
+            *(('pk',) + fields_for_prune_orphans(referring_model))
+        )[:budget]
+    )
+
+    if not relevant_ids:
+        # we didn't find any referring objects. optimization: skip any recursion and referring_model.delete()
+        return 0
+
+    # The recursing bit:
+    for_recursion = dep_graph.get(f"{referring_model._meta.app_label}.{referring_model.__name__}", [])
+
+    for model_for_recursion, fk_name_for_recursion in for_recursion:
+        num_deleted += delete_deps_with_budget(
+            project_id,
+            model_for_recursion,
+            fk_name_for_recursion,
+            [d["pk"] for d in relevant_ids],
+            budget - num_deleted,
+            dep_graph,
+            is_for_project,
+        )
+
+        if num_deleted >= budget:
+            return num_deleted
+
+    # If this point is reached: we have deleted all referring objects that we could delete, and we still have budget
+    # left. We can now delete the referring objects themselves (limited by budget).
+    relevant_ids_after_rec = relevant_ids[:budget - num_deleted]
+
+    do_pre_delete(project_id, referring_model, [d['pk'] for d in relevant_ids_after_rec], is_for_project)
+
+    my_num_deleted, del_d = referring_model.objects.filter(pk__in=[d['pk'] for d in relevant_ids_after_rec]).delete()
+    num_deleted += my_num_deleted
+    assert set(del_d.keys()) == {referring_model._meta.label}  # assert no-cascading (we do that ourselves)
+
+    if is_for_project:
+        # short-circuit: project-deletion implies "no orphans" because the project kills everything with it.
+        return num_deleted
+
+    # Note that prune_orphans doesn't respect the budget. Reason: it's not easy to do, b/c the order is reversed (we
+    # would need to predict somehow at the previous step how much budget to leave unused) and we don't care _that much_
+    # about a precise budget "at the edges of our algo", as long as we don't have a "single huge blocking thing".
+    prune_orphans(referring_model, relevant_ids_after_rec)
+
+    return num_deleted
```
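`delete_deps_with_budget` deletes children before parents and stops once `budget` rows are gone, leaving the rest for a later run. A simplified in-memory sketch of that recursion follows; it drops `project_id`, `do_pre_delete` and orphan pruning, and `delete_with_budget`, the dict-of-dicts `tables` and the sample rows are hypothetical:

```python
def delete_with_budget(tables, dep_graph, model, fk_name, referred_ids, budget):
    """Hypothetical in-memory version of budgeted, children-first deletion.

    tables:    model name -> {pk: row dict}
    dep_graph: referenced model -> [(referring model, fk field name)]
    Deletes rows of `model` whose `fk_name` is in referred_ids, deleting their own dependents first,
    and never more than `budget` rows in total. Returns the number of rows deleted.
    """
    relevant = sorted(pk for pk, row in tables[model].items() if row[fk_name] in referred_ids)[:budget]
    if not relevant:
        return 0

    num_deleted = 0
    for child_model, child_fk in dep_graph.get(model, []):
        num_deleted += delete_with_budget(tables, dep_graph, child_model, child_fk, set(relevant),
                                          budget - num_deleted)
        if num_deleted >= budget:
            return num_deleted  # budget spent on dependents; a later run continues

    # All dependents of `relevant` are gone now, so these rows can go without cascading
    for pk in relevant[:budget - num_deleted]:
        del tables[model][pk]
        num_deleted += 1
    return num_deleted


tables = {
    "events.Event": {1: {"issue": 7}, 2: {"issue": 7}, 3: {"issue": 8}},
    "tags.EventTag": {10: {"event": 1}, 11: {"event": 1}, 12: {"event": 2}, 13: {"event": 3}},
}
dep_graph = {"events.Event": [("tags.EventTag", "event")]}

runs = []
while True:  # keep re-running until a run finishes with budget to spare
    n = delete_with_budget(tables, dep_graph, "events.Event", "issue", {7}, budget=2)
    runs.append(n)
    if n < 2:
        break
print(runs)  # → [2, 2, 1]
```

The loop at the end plays the role of a re-scheduled task: with a budget of 2, the three tags and two events belonging to issue 7 are removed over three runs, and the rows of issue 8 are untouched.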

```
@@ -1,11 +1,17 @@
import json

from django.utils.html import escape, mark_safe
from django.contrib import admin
from django.views.decorators.csrf import csrf_protect
from django.utils.decorators import method_decorator

import json
from bugsink.transaction import immediate_atomic

from projects.admin import ProjectFilter
from .models import Event

csrf_protect_m = method_decorator(csrf_protect)


@admin.register(Event)
class EventAdmin(admin.ModelAdmin):
```

```diff
@@ -90,3 +96,28 @@ class EventAdmin(admin.ModelAdmin):
 
     def on_site(self, obj):
         return mark_safe('<a href="' + escape(obj.get_absolute_url()) + '">View</a>')
+
+    def get_deleted_objects(self, objs, request):
+        to_delete = list(objs) + ["...all its related objects... (delayed)"]
+        model_count = {
+            Event: len(objs),
+        }
+        perms_needed = set()
+        protected = []
+        return to_delete, model_count, perms_needed, protected
+
+    def delete_queryset(self, request, queryset):
+        # NOTE: not the most efficient; it will do for a first version.
+        with immediate_atomic():
+            for obj in queryset:
+                obj.delete_deferred()
+
+    def delete_model(self, request, obj):
+        with immediate_atomic():
+            obj.delete_deferred()
+
+    @csrf_protect_m
+    def delete_view(self, request, object_id, extra_context=None):
+        # the superclass version, but with the transaction.atomic context manager commented out (we do this ourselves)
+        # with transaction.atomic(using=router.db_for_write(self.model)):
+        return self._delete_view(request, object_id, extra_context)
```

```diff
@@ -43,11 +43,24 @@ def create_event(project=None, issue=None, timestamp=None, event_data=None):
     )
 
 
-def create_event_data():
+def create_event_data(exception_type=None):
     # create minimal event data that is valid as per from_json()
 
-    return {
+    result = {
         "event_id": uuid.uuid4().hex,
         "timestamp": timezone.now().isoformat(),
         "platform": "python",
     }
+
+    if exception_type is not None:
+        # allow for a specific exception type to get unique groupers/issues
+        result["exception"] = {
+            "values": [
+                {
+                    "type": exception_type,
+                    "value": "This is a test exception",
+                }
+            ]
+        }
+
+    return result
```

```diff
@@ -23,7 +23,7 @@ def _delete_for_missing_fk(clazz, field_name):
 
 ## Dangling FKs:
 Non-existing objects may come into being when people muddle in the database directly with foreign key checks turned
-off (note that fk checks are turned off by default in SQLite for backwards compatibility reasons).
+off (note that fk checks are turned off by default in sqlite's CLI for backwards compatibility reasons).
 
 In the future it's further possible that there will be pieces of the actual Bugsink code where FK-checks are turned off
 temporarily (e.g. when deleting a project with very many related objects). (In March 2025 there was no such code
```

```diff
@@ -76,6 +76,8 @@ def make_consistent():
 
     _delete_for_missing_fk(Release, 'project')
 
+    _delete_for_missing_fk(EventTag, 'issue')  # See #132 for the ordering of this statement
+
     _delete_for_missing_fk(Event, 'project')
     _delete_for_missing_fk(Event, 'issue')
```
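The new `EventTag` line runs before the `Event` deletions and refers to #132, the MySQL `make_consistent` fix in the changelog. The commit does not spell out the reasoning here; the general rule the ordering is consistent with is that, when foreign keys are enforced, rows referencing a parent have to go before the parent. A sketch with Python's built-in `sqlite3`, using hypothetical `event`/`eventtag` tables and `PRAGMA foreign_keys = ON` to get enforcement:

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    # Enforce FKs on this connection (SQLite leaves this off unless asked; MySQL/InnoDB enforces by default)
    conn.execute("PRAGMA foreign_keys = ON")
    conn.execute("CREATE TABLE event (id INTEGER PRIMARY KEY)")
    conn.execute(
        "CREATE TABLE eventtag (id INTEGER PRIMARY KEY, event_id INTEGER NOT NULL REFERENCES event(id))"
    )
    conn.execute("INSERT INTO event (id) VALUES (1)")
    conn.execute("INSERT INTO eventtag (id, event_id) VALUES (10, 1)")
    return conn


def delete_event(conn: sqlite3.Connection, event_id: int, children_first: bool) -> None:
    if children_first:
        conn.execute("DELETE FROM eventtag WHERE event_id = ?", (event_id,))
    conn.execute("DELETE FROM event WHERE id = ?", (event_id,))


conn = make_db()
try:
    delete_event(conn, 1, children_first=False)
except sqlite3.IntegrityError as e:
    print("parent first:", e)  # → parent first: FOREIGN KEY constraint failed

conn = make_db()
delete_event(conn, 1, children_first=True)
print(conn.execute("SELECT COUNT(*) FROM event").fetchone()[0])  # → 0
```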

```diff
@@ -0,0 +1,34 @@
+# Generated by Django 4.2.21 on 2025-07-03 08:30
+
+from django.db import migrations
+
+
+def remove_events_with_null_fks(apps, schema_editor):
+    # Up until now, we have various models w/ .issue=FK(null=True, on_delete=models.SET_NULL)
+    # Although it is "not expected" in the interface, issue-deletion would have led to those
+    # objects with a null issue. We're about to change that to .issue=FK(null=False, ...) which
+    # would crash if we don't remove those objects first. Object-removal is "fine" though, because
+    # as per the meaning of the SET_NULL, these objects were "dangling" anyway.
+
+    Event = apps.get_model("events", "Event")
+
+    Event.objects.filter(issue__isnull=True).delete()
+
+    # overcomplete b/c .issue would imply this, done anyway in the name of "defensive programming"
+    Event.objects.filter(grouping__isnull=True).delete()
+
+
+class Migration(migrations.Migration):
+
+    dependencies = [
+        ("events", "0019_event_storage_backend"),
+
+        # "in principle" order shouldn't matter, because the various objects that are being deleted here are all "fully
+        # contained" by the .issue; to be safe, however, we depend on the below, because of Grouping.objects.delete()
+        # (which would set Event.grouping=NULL, which the present migration takes into account).
+        ("issues", "0020_remove_objects_with_null_issue"),
+    ]
+
+    operations = [
+        migrations.RunPython(remove_events_with_null_fks, reverse_code=migrations.RunPython.noop),
+    ]
```

events/migrations/0021_alter_do_nothing.py

```diff
@@ -0,0 +1,27 @@
+from django.db import migrations, models
+import django.db.models.deletion
+
+
+class Migration(migrations.Migration):
+
+    dependencies = [
+        ("issues", "0021_alter_do_nothing"),
+        ("events", "0020_remove_events_with_null_issue_or_grouping"),
+    ]
+
+    operations = [
+        migrations.AlterField(
+            model_name="event",
+            name="grouping",
+            field=models.ForeignKey(
+                on_delete=django.db.models.deletion.DO_NOTHING, to="issues.grouping"
+            ),
+        ),
+        migrations.AlterField(
+            model_name="event",
+            name="issue",
+            field=models.ForeignKey(
+                on_delete=django.db.models.deletion.DO_NOTHING, to="issues.issue"
+            ),
+        ),
+    ]
```

events/migrations/0022_alter_event_project.py

```diff
@@ -0,0 +1,21 @@
+from django.db import migrations, models
+import django.db.models.deletion
+
+
+class Migration(migrations.Migration):
+
+    dependencies = [
+        # Django came up with 0014, whatever the reason, I'm sure that 0013 is at least required (as per comments there)
+        ("projects", "0014_alter_projectmembership_project"),
+        ("events", "0021_alter_do_nothing"),
+    ]
+
+    operations = [
+        migrations.AlterField(
+            model_name="event",
+            name="project",
+            field=models.ForeignKey(
+                on_delete=django.db.models.deletion.DO_NOTHING, to="projects.project"
+            ),
+        ),
+    ]
```

```diff
@@ -8,12 +8,15 @@ from django.utils.functional import cached_property
 
 from projects.models import Project
 from compat.timestamp import parse_timestamp
+from bugsink.transaction import delay_on_commit
 
 from issues.utils import get_title_for_exception_type_and_value
 
 from .retention import get_random_irrelevance
 from .storage_registry import get_write_storage, get_storage
+
+from .tasks import delete_event_deps
 
 
 class Platform(models.TextChoices):
     AS3 = "as3"
```

```diff
@@ -72,11 +75,8 @@ class Event(models.Model):
     ingested_at = models.DateTimeField(blank=False, null=False)
     digested_at = models.DateTimeField(db_index=True, blank=False, null=False)
 
-    # not actually expected to be null, but we want to be able to delete issues without deleting events (cleanup later)
-    issue = models.ForeignKey("issues.Issue", blank=False, null=True, on_delete=models.SET_NULL)
-
-    # not actually expected to be null
-    grouping = models.ForeignKey("issues.Grouping", blank=False, null=True, on_delete=models.SET_NULL)
+    issue = models.ForeignKey("issues.Issue", blank=False, null=False, on_delete=models.DO_NOTHING)
+    grouping = models.ForeignKey("issues.Grouping", blank=False, null=False, on_delete=models.DO_NOTHING)
 
     # The docs say:
     # > Required. Hexadecimal string representing a uuid4 value. The length is exactly 32 characters. Dashes are not
```

```diff
@@ -85,7 +85,7 @@ class Event(models.Model):
     # uuid4 clientside". In any case, we just rely on the envelope's event_id (required per the envelope spec).
     # Not a primary key: events may be duplicated across projects
     event_id = models.UUIDField(primary_key=False, null=False, editable=False, help_text="As per the sent data")
-    project = models.ForeignKey(Project, blank=False, null=True, on_delete=models.SET_NULL)  # SET_NULL: cleanup 'later'
+    project = models.ForeignKey(Project, blank=False, null=False, on_delete=models.DO_NOTHING)
 
     data = models.TextField(blank=False, null=False)
```

```diff
@@ -285,3 +285,13 @@ class Event(models.Model):
         return list(
             self.tags.all().select_related("value", "value__key").order_by("value__key__key")
         )
+
+    def delete_deferred(self):
+        """Schedules deletion of all related objects"""
+        # NOTE: for such a small closure, I couldn't be bothered to have an .is_deleted field and deal with it. (the
+        # idea being that the deletion will be relatively quick anyway). We still need "something" though, since we've
+        # set DO_NOTHING everywhere. An alternative would be the "full inline", i.e. delete everything right in the
+        # request w/o any delay. That diverges even more from the approach for Issue/Project, making such things a
+        # "design decision needed". Maybe if we get more `delete_deferred` impls. we'll have a bit more info to figure
+        # out if we can harmonize on (e.g.) 2 approaches.
+        delay_on_commit(delete_event_deps, str(self.project_id), str(self.id))
```
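`delete_deferred` hands the work to `delete_event_deps` via `delay_on_commit`, and that task (added in `events/tasks.py` in this commit) re-schedules itself whenever its budget of 500 deletions runs out. The sketch below shows that self-re-scheduling loop with a hypothetical in-memory `TaskQueue`; it ignores transactions, whereas the real task only enqueues its follow-up on commit:

```python
from collections import deque
from typing import Callable


class TaskQueue:
    """Hypothetical in-memory stand-in for the queue behind delay_on_commit (transactions ignored)."""

    def __init__(self) -> None:
        self.pending: deque = deque()

    def delay(self, func: Callable, *args) -> None:
        self.pending.append((func, args))

    def run_all(self) -> int:
        runs = 0
        while self.pending:
            func, args = self.pending.popleft()
            func(*args)
            runs += 1
        return runs


def make_deleter(queue: TaskQueue, rows: list, budget: int) -> Callable[[], None]:
    def delete_some() -> None:
        # Each run deletes at most `budget` rows, keeping every transaction (and write lock) short
        del rows[:budget]
        if rows:
            # Work remains: re-schedule ourselves rather than looping inside this run
            queue.delay(delete_some)
    return delete_some


rows = list(range(1234))
queue = TaskQueue()
queue.delay(make_deleter(queue, rows, budget=500))
print(queue.run_all(), len(rows))  # → 3 0
```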

```diff
@@ -376,7 +376,7 @@ def evict_for_epoch_and_irrelevance(project, max_epoch, max_irrelevance, max_eve
             Event.objects.filter(pk__in=pks_to_delete).exclude(storage_backend=None)
             .values_list("id", "storage_backend")
         )
-        issue_deletions = {
+        deletions_per_issue = {
             d['issue_id']: d['count'] for d in
             Event.objects.filter(pk__in=pks_to_delete).values("issue_id").annotate(count=Count("issue_id"))}
```

@@ -387,9 +387,9 @@ def evict_for_epoch_and_irrelevance(project, max_epoch, max_irrelevance, max_eve
         nr_of_deletions = Event.objects.filter(pk__in=pks_to_delete).delete()[1].get("events.Event", 0)
     else:
         nr_of_deletions = 0
-        issue_deletions = {}
+        deletions_per_issue = {}
 
-    return EvictionCounts(nr_of_deletions, issue_deletions)
+    return EvictionCounts(nr_of_deletions, deletions_per_issue)
 
 
 def cleanup_events_on_storage(todos):
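This hunk only renames `issue_deletions` to `deletions_per_issue`; the value is still a mapping from issue id to the number of evicted events for that issue, built with `values("issue_id").annotate(count=Count("issue_id"))`. As a rough plain-Python illustration of what that aggregation yields, assuming the evicted events are given as dicts with an `issue_id` key (a hypothetical stand-in for the queryset, not Bugsink code):

```python
from collections import Counter


def count_deletions_per_issue(evicted_events):
    """Map issue_id -> number of evicted events for that issue.

    Hypothetical plain-Python stand-in for
    Event.objects.filter(pk__in=...).values("issue_id").annotate(count=Count("issue_id")).
    """
    return dict(Counter(event["issue_id"] for event in evicted_events))


evicted = [
    {"id": 1, "issue_id": "a"},
    {"id": 2, "issue_id": "a"},
    {"id": 3, "issue_id": "b"},
]
print(count_deletions_per_issue(evicted))  # {'a': 2, 'b': 1}
```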

53 events/tasks.py Normal file
@@ -0,0 +1,53 @@
+from snappea.decorators import shared_task
+
+from bugsink.utils import get_model_topography, delete_deps_with_budget
+from bugsink.transaction import immediate_atomic, delay_on_commit
+
+
+@shared_task
+def delete_event_deps(project_id, event_id):
+    from .models import Event  # avoid circular import
+    with immediate_atomic():
+        # matches what we do in events/retention.py (and for which argumentation exists); in practice I have seen _much_
+        # faster deletion times (in the order of .03s per task on my local laptop) when using a budget of 500, _but_
+        # it's not a given those were for "expensive objects" (e.g. events); and I'd rather err on the side of caution
+        # (worst case we have a bit of inefficiency; in any case this avoids hogging the global write lock / timeouts).
+        budget = 500
+        num_deleted = 0
+
+        # NOTE: for this delete_x_deps, we didn't bother optimizing the topography graph (the dependency-graph of a
+        # single event is believed to be small enough to not warrant further optimization).
+        dep_graph = get_model_topography()
+
+        for model_for_recursion, fk_name_for_recursion in dep_graph["events.Event"]:
+            this_num_deleted = delete_deps_with_budget(
+                project_id,
+                model_for_recursion,
+                fk_name_for_recursion,
+                [event_id],
+                budget - num_deleted,
+                dep_graph,
+                is_for_project=False,
+            )
+
+            num_deleted += this_num_deleted
+
+            if num_deleted >= budget:
+                delay_on_commit(delete_event_deps, project_id, event_id)
+                return
+
+        if budget - num_deleted <= 0:
+            # no more budget for the self-delete.
+            delay_on_commit(delete_event_deps, project_id, event_id)
+
+        else:
+            # final step: delete the event itself
+            issue = Event.objects.get(pk=event_id).issue
+
+            Event.objects.filter(pk=event_id).delete()
+
+            # manual (outside of delete_deps_with_budget) b/c the special-case in that function is (ATM) specific to
+            # project (it was built around Issue-deletion initially, so Issue outliving the event-deletion was not
+            # part of that functionality). we might refactor this at some point.
+            issue.stored_event_count -= 1
+            issue.save(update_fields=["stored_event_count"])
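`delete_event_deps` spends at most `budget` (500) deletions per task run. When a dependency pass uses up the budget, the task re-queues itself with `delay_on_commit` and returns, so the next run simply continues with whatever rows are left; only a run that finishes with budget to spare deletes the Event itself. The pure-Python simulation below sketches that control flow only. It is an assumption-laden stand-in: the `db` dict replaces the database, a `deque` replaces snappea's task queue, and `delete_with_budget` is a hypothetical flat version of `delete_deps_with_budget` (the real function recurses through the model topography).

```python
from collections import deque


def delete_with_budget(rows, budget):
    """Hypothetical stand-in for delete_deps_with_budget: drop up to `budget` rows, return the count."""
    n = min(budget, len(rows))
    del rows[:n]
    return n


def delete_event_deps_sim(db, event_id, queue, budget=500):
    """One simulated task run with the same control flow as delete_event_deps."""
    num_deleted = 0
    for rows in db["deps"].values():
        num_deleted += delete_with_budget(rows, budget - num_deleted)
        if num_deleted >= budget:
            queue.append(event_id)  # stand-in for delay_on_commit(delete_event_deps, ...)
            return
    if budget - num_deleted <= 0:
        queue.append(event_id)  # no budget left for the self-delete
    else:
        db["events"].discard(event_id)  # final step: delete the event itself


db = {"events": {"e1"}, "deps": {"tags": list(range(700)), "turningpoints": list(range(450))}}
queue = deque(["e1"])
runs = 0
while queue:
    delete_event_deps_sim(db, queue.popleft(), queue)
    runs += 1

print(runs, db["events"])  # 3 set()
```

With 1150 dependent rows and a budget of 500, the first two runs each stop after 500 deletions and re-queue; the third run clears the remaining 150 rows and then removes the event.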

@@ -9,8 +9,7 @@ from django.utils import timezone
 
 from bugsink.test_utils import TransactionTestCase25251 as TransactionTestCase
 from projects.models import Project, ProjectMembership
-from issues.models import Issue
-from issues.factories import denormalized_issue_fields
+from issues.factories import get_or_create_issue
 
 from .factories import create_event
 from .retention import (

@@ -28,7 +27,7 @@ class ViewTests(TransactionTestCase):
         self.user = User.objects.create_user(username='test', password='test')
         self.project = Project.objects.create()
         ProjectMembership.objects.create(project=self.project, user=self.user)
-        self.issue = Issue.objects.create(project=self.project, **denormalized_issue_fields())
+        self.issue, _ = get_or_create_issue(project=self.project)
         self.event = create_event(self.project, self.issue)
         self.client.force_login(self.user)

@@ -154,7 +153,7 @@ class RetentionTestCase(DjangoTestCase):
         digested_at = timezone.now()
 
         self.project = Project.objects.create(retention_max_event_count=5)
-        self.issue = Issue.objects.create(project=self.project, **denormalized_issue_fields())
+        self.issue, _ = get_or_create_issue(project=self.project)
 
         for digest_order in range(1, 7):
             project_stored_event_count += 1  # +1 pre-create, as in the ingestion view

@@ -180,7 +179,7 @@ class RetentionTestCase(DjangoTestCase):
         project_stored_event_count = 0
 
         self.project = Project.objects.create(retention_max_event_count=999)
-        self.issue = Issue.objects.create(project=self.project, **denormalized_issue_fields())
+        self.issue, _ = get_or_create_issue(project=self.project)
 
         current_timestamp = datetime.datetime(2022, 1, 1, 0, 0, 0, tzinfo=datetime.timezone.utc)

@@ -131,7 +131,7 @@ class BaseIngestAPIView(View):
     @classmethod
     def get_project(cls, project_pk, sentry_key):
         try:
-            return Project.objects.get(pk=project_pk, sentry_key=sentry_key)
+            return Project.objects.get(pk=project_pk, sentry_key=sentry_key, is_deleted=False)
         except Project.DoesNotExist:
             # We don't distinguish between "project not found" and "key incorrect"; there's no real value in that from
             # the user perspective (they deal in dsns). Additional advantage: no need to do constant-time-comp on

@@ -251,7 +251,12 @@ class BaseIngestAPIView(View):
         ingested_at = parse_timestamp(event_metadata["ingested_at"])
         digested_at = datetime.now(timezone.utc) if digested_at is None else digested_at  # explicit passing: test only
 
-        project = Project.objects.get(pk=event_metadata["project_id"])
+        try:
+            project = Project.objects.get(pk=event_metadata["project_id"], is_deleted=False)
+        except Project.DoesNotExist:
+            # we may get here if the project was deleted after the event was ingested, but before it was digested
+            # (covers both "deletion in progress (is_deleted=True)" and "fully deleted").
+            return
 
         if not cls.count_project_periods_and_act_on_it(project, digested_at):
             return  # if over-quota: just return (any cleanup is done calling-side)

@@ -360,6 +365,7 @@ class BaseIngestAPIView(View):
 
         if issue_created:
             TurningPoint.objects.create(
+                project=project,
                 issue=issue, triggering_event=event, timestamp=ingested_at,
                 kind=TurningPointKind.FIRST_SEEN)
             event.never_evict = True

@@ -371,6 +377,7 @@ class BaseIngestAPIView(View):
             # new issues cannot be regressions by definition, hence this is in the 'else' branch
             if issue_is_regression(issue, event.release):
                 TurningPoint.objects.create(
+                    project=project,
                     issue=issue, triggering_event=event, timestamp=ingested_at,
                     kind=TurningPointKind.REGRESSED)
                 event.never_evict = True

@@ -1,8 +1,14 @@
 from django.contrib import admin
 
+from bugsink.transaction import immediate_atomic
+from django.utils.decorators import method_decorator
+from django.views.decorators.csrf import csrf_protect
+
 from .models import Issue, Grouping, TurningPoint
 from .forms import IssueAdminForm
 
+csrf_protect_m = method_decorator(csrf_protect)
+
 
 class GroupingInline(admin.TabularInline):
     model = Grouping

@@ -79,3 +85,28 @@ class IssueAdmin(admin.ModelAdmin):
         'digested_event_count',
         'stored_event_count',
     ]
+
+    def get_deleted_objects(self, objs, request):
+        to_delete = list(objs) + ["...all its related objects... (delayed)"]
+        model_count = {
+            Issue: len(objs),
+        }
+        perms_needed = set()
+        protected = []
+        return to_delete, model_count, perms_needed, protected
+
+    def delete_queryset(self, request, queryset):
+        # NOTE: not the most efficient; it will do for a first version.
+        with immediate_atomic():
+            for obj in queryset:
+                obj.delete_deferred()
+
+    def delete_model(self, request, obj):
+        with immediate_atomic():
+            obj.delete_deferred()
+
+    @csrf_protect_m
+    def delete_view(self, request, object_id, extra_context=None):
+        # the superclass version, but with the transaction.atomic context manager commented out (we do this ourselves)
+        # with transaction.atomic(using=router.db_for_write(self.model)):
+        return self._delete_view(request, object_id, extra_context)

@@ -12,6 +12,7 @@ def get_or_create_issue(project=None, event_data=None):
    if event_data is None:
        from events.factories import create_event_data
        event_data = create_event_data()

    if project is None:
        project = Project.objects.create(name="Test project")

16 issues/migrations/0018_issue_is_deleted.py Normal file
@@ -0,0 +1,16 @@
+from django.db import migrations, models
+
+
+class Migration(migrations.Migration):
+
+    dependencies = [
+        ("issues", "0017_issue_list_indexes_must_start_with_project"),
+    ]
+
+    operations = [
+        migrations.AddField(
+            model_name="issue",
+            name="is_deleted",
+            field=models.BooleanField(default=False),
+        ),
+    ]

16 issues/migrations/0019_alter_grouping_grouping_key_hash.py Normal file
@@ -0,0 +1,16 @@
+from django.db import migrations, models
+
+
+class Migration(migrations.Migration):
+
+    dependencies = [
+        ("issues", "0018_issue_is_deleted"),
+    ]
+
+    operations = [
+        migrations.AlterField(
+            model_name="grouping",
+            name="grouping_key_hash",
+            field=models.CharField(max_length=64, null=True),
+        ),
+    ]

26 issues/migrations/0020_remove_objects_with_null_issue.py Normal file
@@ -0,0 +1,26 @@
+from django.db import migrations
+
+
+def remove_objects_with_null_issue(apps, schema_editor):
+    # Up until now, we have various models w/ .issue=FK(null=True, on_delete=models.SET_NULL)
+    # Although it is "not expected" in the interface, issue-deletion would have led to those
+    # objects with a null issue. We're about to change that to .issue=FK(null=False, ...) which
+    # would crash if we don't remove those objects first. Object-removal is "fine" though, because
+    # as per the meaning of the SET_NULL, these objects were "dangling" anyway.
+
+    Grouping = apps.get_model("issues", "Grouping")
+    TurningPoint = apps.get_model("issues", "TurningPoint")
+
+    Grouping.objects.filter(issue__isnull=True).delete()
+    TurningPoint.objects.filter(issue__isnull=True).delete()
+
+
+class Migration(migrations.Migration):
+
+    dependencies = [
+        ("issues", "0019_alter_grouping_grouping_key_hash"),
+    ]
+
+    operations = [
+        migrations.RunPython(remove_objects_with_null_issue, reverse_code=migrations.RunPython.noop),
+    ]
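The migration above relies on how `SET_NULL` behaves: deleting an Issue does not remove its Groupings and TurningPoints but only nulls their `issue` column, and such orphaned rows would block the switch to `null=False`. The sketch below reproduces that state with the standard-library `sqlite3` module. It is an illustration under stated assumptions: the tables are simplified, and Django actually performs `SET_NULL` in Python through the ORM rather than through a database `ON DELETE SET NULL` clause, which is used here only to produce the same end state.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite only enforces foreign keys with this pragma
conn.execute("CREATE TABLE issues_issue (id INTEGER PRIMARY KEY)")
conn.execute(
    "CREATE TABLE issues_grouping ("
    " id INTEGER PRIMARY KEY,"
    " issue_id INTEGER REFERENCES issues_issue(id) ON DELETE SET NULL)"
)
conn.executemany("INSERT INTO issues_issue (id) VALUES (?)", [(1,), (2,)])
conn.executemany("INSERT INTO issues_grouping (id, issue_id) VALUES (?, ?)", [(10, 1), (11, 1), (12, 2)])

# Deleting issue 1 leaves its groupings behind with issue_id = NULL ("dangling").
conn.execute("DELETE FROM issues_issue WHERE id = 1")
dangling = conn.execute("SELECT COUNT(*) FROM issues_grouping WHERE issue_id IS NULL").fetchone()[0]

# SQL equivalent of Grouping.objects.filter(issue__isnull=True).delete() in the data migration.
conn.execute("DELETE FROM issues_grouping WHERE issue_id IS NULL")
remaining = [row[0] for row in conn.execute("SELECT id FROM issues_grouping ORDER BY id")]
print(dangling, remaining)  # 2 [12]
```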

26 issues/migrations/0021_alter_do_nothing.py Normal file
@@ -0,0 +1,26 @@
+from django.db import migrations, models
+import django.db.models.deletion
+
+
+class Migration(migrations.Migration):
+
+    dependencies = [
+        ("issues", "0020_remove_objects_with_null_issue"),
+    ]
+
+    operations = [
+        migrations.AlterField(
+            model_name="grouping",
+            name="issue",
+            field=models.ForeignKey(
+                on_delete=django.db.models.deletion.DO_NOTHING, to="issues.issue"
+            ),
+        ),
+        migrations.AlterField(
+            model_name="turningpoint",
+            name="issue",
+            field=models.ForeignKey(
+                on_delete=django.db.models.deletion.DO_NOTHING, to="issues.issue"
+            ),
+        ),
+    ]

22 issues/migrations/0022_turningpoint_project.py Normal file
@@ -0,0 +1,22 @@
+from django.db import migrations, models
+import django.db.models.deletion
+
+
+class Migration(migrations.Migration):
+
+    dependencies = [
+        ("projects", "0012_project_is_deleted"),
+        ("issues", "0021_alter_do_nothing"),
+    ]
+
+    operations = [
+        migrations.AddField(
+            model_name="turningpoint",
+            name="project",
+            field=models.ForeignKey(
+                null=True,
+                on_delete=django.db.models.deletion.DO_NOTHING,
+                to="projects.project",
+            ),
+        ),
+    ]

36 issues/migrations/0023_turningpoint_set_project.py Normal file
@@ -0,0 +1,36 @@
+from django.db import migrations
+
+
+def turningpoint_set_project(apps, schema_editor):
+    TurningPoint = apps.get_model("issues", "TurningPoint")
+
+    # TurningPoint.objects.update(project=F("issue__project"))
+    # fails with "Joined field references are not permitted in this query"
+
+    # This one's elegant and works in sqlite but not in MySQL:
+    # TurningPoint.objects.update(
+    #     project=Subquery(
+    #         TurningPoint.objects
+    #         .filter(pk=OuterRef('pk'))
+    #         .values('issue__project')[:1]
+    #     )
+    # )
+    # django.db.utils.OperationalError: (1093, "You can't specify target table 'issues_turningpoint' for update in FROM
+    # clause")
+
+    # so in the end we'll just loop:
+
+    for turningpoint in TurningPoint.objects.all():
+        turningpoint.project = turningpoint.issue.project
+        turningpoint.save(update_fields=["project"])
+
+
+class Migration(migrations.Migration):
+
+    dependencies = [
+        ("issues", "0022_turningpoint_project"),
+    ]
+
+    operations = [
+        migrations.RunPython(turningpoint_set_project, migrations.RunPython.noop),
+    ]
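The comments in migration 0023 describe a portability problem: an `UPDATE` whose subquery reads from the very table being updated is accepted by SQLite but rejected by MySQL with error 1093, which is why the migration falls back to a per-row loop. A sketch using the standard-library `sqlite3` module, with simplified tables whose names merely mirror Django's defaults, shows that both forms give the same result on SQLite. It only exercises the SQLite side; it cannot reproduce the MySQL error.

```python
import sqlite3


def make_db():
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE issues_issue (id INTEGER PRIMARY KEY, project_id INTEGER NOT NULL)")
    conn.execute(
        "CREATE TABLE issues_turningpoint (id INTEGER PRIMARY KEY, issue_id INTEGER NOT NULL, project_id INTEGER)"
    )
    conn.executemany("INSERT INTO issues_issue VALUES (?, ?)", [(1, 100), (2, 200)])
    conn.executemany("INSERT INTO issues_turningpoint (id, issue_id) VALUES (?, ?)", [(10, 1), (11, 2), (12, 1)])
    return conn


def snapshot(conn):
    return conn.execute("SELECT id, project_id FROM issues_turningpoint ORDER BY id").fetchall()


# Variant 1: one UPDATE whose subquery reads the target table (accepted by SQLite, error 1093 on MySQL).
conn_a = make_db()
conn_a.execute(
    "UPDATE issues_turningpoint SET project_id = ("
    " SELECT i.project_id FROM issues_turningpoint t"
    " JOIN issues_issue i ON i.id = t.issue_id"
    " WHERE t.id = issues_turningpoint.id LIMIT 1)"
)

# Variant 2: the portable per-row loop that the migration settles on.
conn_b = make_db()
for tp_id, issue_id in conn_b.execute("SELECT id, issue_id FROM issues_turningpoint").fetchall():
    (project_id,) = conn_b.execute("SELECT project_id FROM issues_issue WHERE id = ?", (issue_id,)).fetchone()
    conn_b.execute("UPDATE issues_turningpoint SET project_id = ? WHERE id = ?", (project_id, tp_id))

print(snapshot(conn_a))  # [(10, 100), (11, 200), (12, 100)]
```

The loop costs one query pair per row, which is acceptable for a one-off data migration but would not be for a hot path.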

@@ -0,0 +1,20 @@
+from django.db import migrations, models
+import django.db.models.deletion
+
+
+class Migration(migrations.Migration):
+
+    dependencies = [
+        ("projects", "0012_project_is_deleted"),
+        ("issues", "0023_turningpoint_set_project"),
+    ]
+
+    operations = [
+        migrations.AlterField(
+            model_name="turningpoint",
+            name="project",
+            field=models.ForeignKey(
+                on_delete=django.db.models.deletion.DO_NOTHING, to="projects.project"
+            ),
+        ),
+    ]

@@ -0,0 +1,28 @@
+from django.db import migrations, models
+import django.db.models.deletion
+
+
+class Migration(migrations.Migration):
+
+    dependencies = [
+        # Django came up with 0014, whatever the reason, I'm sure that 0013 is at least required (as per comments there)
+        ("projects", "0014_alter_projectmembership_project"),
+        ("issues", "0024_turningpoint_project_alter_not_null"),
+    ]
+
+    operations = [
+        migrations.AlterField(
+            model_name="grouping",
+            name="project",
+            field=models.ForeignKey(
+                on_delete=django.db.models.deletion.DO_NOTHING, to="projects.project"
+            ),
+        ),
+        migrations.AlterField(
+            model_name="issue",
+            name="project",
+            field=models.ForeignKey(
+                on_delete=django.db.models.deletion.DO_NOTHING, to="projects.project"
+            ),
+        ),
+    ]

@@ -10,6 +10,7 @@ from django.conf import settings
 from django.utils.functional import cached_property
 
 from bugsink.volume_based_condition import VolumeBasedCondition
+from bugsink.transaction import delay_on_commit
 from alerts.tasks import send_unmute_alert
 from compat.timestamp import parse_timestamp, format_timestamp
 from tags.models import IssueTag, TagValue

@@ -18,6 +19,8 @@ from .utils import (
     parse_lines, serialize_lines, filter_qs_for_fixed_at, exclude_qs_for_fixed_at,
     get_title_for_exception_type_and_value)
 
+from .tasks import delete_issue_deps
+
 
 class IncongruentStateException(Exception):
     pass

@@ -32,7 +35,9 @@ class Issue(models.Model):
     id = models.UUIDField(primary_key=True, default=uuid.uuid4, editable=False)
 
     project = models.ForeignKey(
-        "projects.Project", blank=False, null=True, on_delete=models.SET_NULL)  # SET_NULL: cleanup 'later'
+        "projects.Project", blank=False, null=False, on_delete=models.DO_NOTHING)
+
+    is_deleted = models.BooleanField(default=False)
 
     # 1-based for the same reasons as Event.digest_order
     digest_order = models.PositiveIntegerField(blank=False, null=False)

@@ -72,6 +77,21 @@ class Issue(models.Model):
             self.digest_order = max_current + 1 if max_current is not None else 1
         super().save(*args, **kwargs)
 
+    def delete_deferred(self):
+        """Marks the issue as deleted, and schedules deletion of all related objects"""
+        self.is_deleted = True
+        self.save(update_fields=["is_deleted"])
+
+        # we set grouping_key_hash to None to ensure that event digests that happen simultaneously with the delayed
+        # cleanup will get their own fresh Grouping and hence Issue. This matches with the behavior that would happen
+        # if Issue deletion would have been instantaneous (i.e. it's the least surprising behavior).
+        #
+        # `issue=None` is explicitly _not_ part of this update, such that the actual deletion of the Groupings will be
+        # picked up as part of the delete_issue_deps task.
+        self.grouping_set.all().update(grouping_key_hash=None)
+
+        delay_on_commit(delete_issue_deps, str(self.project_id), str(self.id))
+
     def friendly_id(self):
         return f"{ self.project.slug.upper() }-{ self.digest_order }"
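`Issue.delete_deferred` flags the issue right away but leaves the row removal to `delete_issue_deps`. Because it also sets `grouping_key_hash` to `None` on the issue's Groupings (while keeping their `issue` link so the cleanup task still finds them), an event digested during the pending cleanup no longer matches the old Grouping and ends up in a fresh Grouping and Issue. The in-memory sketch below illustrates only that lookup behaviour. The `Store` class and its methods are hypothetical and do not mirror Bugsink's digest code, and SHA-256 is merely assumed as a hash that fits the 64-character `grouping_key_hash` column.

```python
import hashlib
import itertools


class Store:
    """Hypothetical in-memory stand-in for the Grouping and Issue tables."""

    def __init__(self):
        self.groupings = []  # dicts with "grouping_key_hash" and "issue_id"
        self.issues = {}  # issue_id -> {"is_deleted": bool}
        self._ids = itertools.count(1)

    def digest(self, grouping_key):
        """Return the id of the issue an event with this grouping key is attached to."""
        key_hash = hashlib.sha256(grouping_key.encode()).hexdigest()
        for grouping in self.groupings:
            if grouping["grouping_key_hash"] == key_hash:
                return grouping["issue_id"]
        issue_id = next(self._ids)  # no match: create a new Issue and a new Grouping
        self.issues[issue_id] = {"is_deleted": False}
        self.groupings.append({"grouping_key_hash": key_hash, "issue_id": issue_id})
        return issue_id

    def delete_deferred(self, issue_id):
        self.issues[issue_id]["is_deleted"] = True
        for grouping in self.groupings:
            if grouping["issue_id"] == issue_id:
                grouping["grouping_key_hash"] = None  # issue_id is kept so the cleanup can find the row


store = Store()
first = store.digest("ZeroDivisionError: division by zero")
store.delete_deferred(first)
second = store.digest("ZeroDivisionError: division by zero")
print(first, second)  # 1 2
```

Without the `grouping_key_hash = None` step, the second `digest` call would return `1` and attach the new event to an issue that is about to disappear.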

@@ -193,14 +213,14 @@ class Grouping(models.Model):
     into a single issue. (such manual merging is not yet implemented, but the data-model is already prepared for it)
     """
     project = models.ForeignKey(
-        "projects.Project", blank=False, null=True, on_delete=models.SET_NULL)  # SET_NULL: cleanup 'later'
+        "projects.Project", blank=False, null=False, on_delete=models.DO_NOTHING)
 
     grouping_key = models.TextField(blank=False, null=False)
 
     # we hash the key to make it indexable on MySQL, see https://code.djangoproject.com/ticket/2495
-    grouping_key_hash = models.CharField(max_length=64, blank=False, null=False)
+    grouping_key_hash = models.CharField(max_length=64, blank=False, null=True)
 
-    issue = models.ForeignKey("Issue", blank=False, null=True, on_delete=models.SET_NULL)  # SET_NULL: cleanup 'later'
+    issue = models.ForeignKey("Issue", blank=False, null=False, on_delete=models.DO_NOTHING)
 
     def __str__(self):
         return self.grouping_key

@@ -328,10 +348,15 @@ class IssueStateManager(object):
                 # path is never reached via UI-based paths (because those are by definition not event-triggered); thus
                 # the 2 ways of creating TurningPoints do not collide.
                 TurningPoint.objects.create(
+                    project_id=issue.project_id,
                     issue=issue, triggering_event=triggering_event, timestamp=triggering_event.ingested_at,
                     kind=TurningPointKind.UNMUTED, metadata=json.dumps(unmute_metadata))
                 triggering_event.never_evict = True  # .save() will be called by the caller of this function
 
+    @staticmethod
+    def delete(issue):
+        issue.delete_deferred()
+
     @staticmethod
     def get_unmute_thresholds(issue):
         unmute_vbcs = [

@@ -450,6 +475,11 @@ class IssueQuerysetStateManager(object):
         for issue in issue_qs:
             IssueStateManager.unmute(issue, triggering_event)
 
+    @staticmethod
+    def delete(issue_qs):
+        for issue in issue_qs:
+            issue.delete_deferred()
+
 
 class TurningPointKind(models.IntegerChoices):
     # The language of the kinds reflects a historic view of the system, e.g. "first seen" as opposed to "new issue"; an

@@ -471,7 +501,8 @@ class TurningPoint(models.Model):
     # basically: an Event, but that name was already taken in our system :-) alternative names I considered:
     # "milestone", "state_change", "transition", "annotation", "episode"
 
-    issue = models.ForeignKey("Issue", blank=False, null=True, on_delete=models.SET_NULL)  # SET_NULL: cleanup 'later'
+    project = models.ForeignKey("projects.Project", blank=False, null=False, on_delete=models.DO_NOTHING)
+    issue = models.ForeignKey("Issue", blank=False, null=False, on_delete=models.DO_NOTHING)
     triggering_event = models.ForeignKey("events.Event", blank=True, null=True, on_delete=models.DO_NOTHING)
 
     # null: the system-user

82 issues/tasks.py Normal file
@@ -0,0 +1,82 @@
+from snappea.decorators import shared_task
+
+from bugsink.utils import get_model_topography, delete_deps_with_budget
+from bugsink.transaction import immediate_atomic, delay_on_commit
+
+
+def get_model_topography_with_issue_override():
+    """
+    Returns the model topography with ordering adjusted to prefer deletions via .issue, when available.
+
+    This assumes that Issue is not only the root of the dependency graph, but also that if a model has an .issue
+    ForeignKey, deleting it via that path is sufficient, meaning we can safely avoid visiting the same model again
+    through other ForeignKey routes (e.g. Event.grouping or TurningPoint.triggering_event).
+
+    The preference is encoded via an explicit list of models, which are visited early and only via their .issue path.
+    """
+    from issues.models import TurningPoint, Grouping
+    from events.models import Event
+    from tags.models import IssueTag, EventTag
+
+    preferred = [
+        TurningPoint,  # above Event, to avoid deletions via .triggering_event
+        EventTag,  # above Event, to avoid deletions via .event
+        Event,  # above Grouping, to avoid deletions via .grouping
+        Grouping,
+        IssueTag,
+    ]
+
+    def as_preferred(lst):
+        """
+        Sorts the list of (model, fk_name) tuples such that the models are in the preferred order as indicated above,
+        and models which occur with another fk_name are pruned
+        """
+        return sorted(
+            [(model, fk_name) for model, fk_name in lst if fk_name == "issue" or model not in preferred],
+            key=lambda x: preferred.index(x[0]) if x[0] in preferred else len(preferred),
+        )
+
+    topo = get_model_topography()
+    for k, lst in topo.items():
+        topo[k] = as_preferred(lst)
+
+    return topo
+
+
+@shared_task
+def delete_issue_deps(project_id, issue_id):
+    from .models import Issue  # avoid circular import
+    with immediate_atomic():
+        # matches what we do in events/retention.py (and for which argumentation exists); in practice I have seen _much_
+        # faster deletion times (in the order of .03s per task on my local laptop) when using a budget of 500, _but_
+        # it's not a given those were for "expensive objects" (e.g. events); and I'd rather err on the side of caution
+        # (worst case we have a bit of inefficiency; in any case this avoids hogging the global write lock / timeouts).
+        budget = 500
+        num_deleted = 0
+
+        dep_graph = get_model_topography_with_issue_override()
+
+        for model_for_recursion, fk_name_for_recursion in dep_graph["issues.Issue"]:
+            this_num_deleted = delete_deps_with_budget(
+                project_id,
+                model_for_recursion,
+                fk_name_for_recursion,
+                [issue_id],
+                budget - num_deleted,
+                dep_graph,
+                is_for_project=False,
+            )
+
+            num_deleted += this_num_deleted
+
+            if num_deleted >= budget:
+                delay_on_commit(delete_issue_deps, project_id, issue_id)
+                return
+
+        if budget - num_deleted <= 0:
+            # no more budget for the self-delete.
+            delay_on_commit(delete_issue_deps, project_id, issue_id)
+
+        else:
+            # final step: delete the issue itself
+            Issue.objects.filter(pk=issue_id).delete()
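The `as_preferred` helper both reorders and prunes each model's list of `(model, fk_name)` pairs: models in `preferred` are kept only when reached through their `issue` foreign key and sort first, in the listed order, while all other models follow in their original relative order (Python's `sorted` is stable). A standalone sketch of the same expression, with plain strings standing in for model classes; the example dependency lists and `SomeOtherModel` are made up for illustration:

```python
preferred = ["TurningPoint", "EventTag", "Event", "Grouping", "IssueTag"]


def as_preferred(lst):
    # Same expression as in issues/tasks.py, with strings standing in for model classes.
    return sorted(
        [(model, fk_name) for model, fk_name in lst if fk_name == "issue" or model not in preferred],
        key=lambda x: preferred.index(x[0]) if x[0] in preferred else len(preferred),
    )


issue_deps = [
    ("Grouping", "issue"),
    ("Event", "grouping"),  # pruned: Event is preferred, and this is not its .issue path
    ("SomeOtherModel", "issue"),  # hypothetical non-preferred model
    ("Event", "issue"),
    ("TurningPoint", "triggering_event"),  # pruned
    ("TurningPoint", "issue"),
]
print(as_preferred(issue_deps))
# [('TurningPoint', 'issue'), ('Event', 'issue'), ('Grouping', 'issue'), ('SomeOtherModel', 'issue')]

event_deps = [("EventTag", "event"), ("TurningPoint", "triggering_event"), ("SomeOtherModel", "event")]
print(as_preferred(event_deps))  # [('SomeOtherModel', 'event')]
```

The second call shows the pruning side: under an Event, the preferred models are dropped entirely, because they are expected to be reached (and deleted) via their `.issue` path instead.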

@@ -7,6 +7,23 @@
 
 {% block content %}
 
+<!-- Delete Confirmation Modal -->
+<div id="deleteModal" class="hidden fixed inset-0 bg-slate-600 bg-opacity-50 overflow-y-auto h-full w-full z-50 flex items-center justify-center">
+    <div class="relative p-6 border border-slate-300 w-96 shadow-lg rounded-md bg-white">
+        <div class="text-center m-4">
+            <h3 class="text-2xl font-semibold text-slate-800 mt-3 mb-4">Delete Issues</h3>
+            <div class="mt-4 mb-6">
+                <p class="text-slate-700">
+                    Deleting an Issue is a permanent action and cannot be undone. It's typically better to resolve or mute an issue instead of deleting it, as this allows you to keep track of past issues and their resolutions.
+                </p>
+            </div>
+            <div class="flex items-center justify-center space-x-4 mb-4">
+                <button id="cancelDelete" class="text-cyan-500 font-bold">Cancel</button>
+                <button id="confirmDelete" type="submit" class="font-bold py-2 px-4 rounded bg-red-500 text-white border-2 border-red-600 hover:bg-red-600 active:ring">Delete</button>
+            </div>
+        </div>
+    </div>
+</div>
 
 
 <div class="m-4">

@@ -35,7 +52,7 @@
 
 <div>
 
-<form action="." method="post">
+<form action="." method="post" id="issueForm">
 {% csrf_token %}
 
 <table class="w-full">

@@ -122,8 +139,18 @@
